A conversation with your architecture

How I’m using generative AI to understand diagrams, gain insights, make decisions, generate diagrams, and even generate infrastructure code

Over the course of my career I have worked on a number of sprawling software systems. One tool that has been invaluable during my time as a software engineer is the use of software and architecture diagrams. But one pain point I always have with diagrams is that they are almost always complex, with a lot of detail, and coming into an existing system I need to be able to get up to speed quickly. In this article, I will show you the value of software and architecture diagrams, challenges we face with them, and how I’m using generative AI to better understand diagrams, gain deeper insights, make decisions, generate diagrams, and even generate infrastructure code.

Let’s get started.

Why diagrams are important

Software and architecture diagrams are critical tools as they help developers and stakeholders understand complex systems.

A diagram can help bridge the gap between technical and non-technical stakeholders. With a common visual language, a diagram can communicate complex relationships and details better than pages of text can. They can help us see the big picture so we can better understand how various parts of a system work together or what will be impacted with changes.

All of this helps us plan for the future, identify risks and dependencies, and make informed decisions.

Different types of diagrams also have different purposes. With class diagrams you have a blueprint for implementation and can visualize inheritance and composition relationships better. Sequence diagrams help you understand the flow and how objects interact over time.

Together, the various types of diagrams give a comprehensive view of a system. But they can also pose some challenges

Challenges with diagrams

There are a number of challenges with software and architecture diagrams but a couple that are top of mind for me are how quickly they can become complex and how easily they become out of date.

It’s important to make sure your diagram has the right level of detail for its purpose and audience. Too much detail and they become overwhelming. Too little detail and you don’t have the right information to make a decision. It is a fine balance and takes practice.

Diagrams are also as of a point in time and if they are not maintained over time, they won’t reflect reality, making it harder to get accurate insights and make decisions.

There are approaches and tools to manage some of these challenges but today I want to show how using generative AI can help you to better understand diagrams, gain deeper insights, make decisions, generate diagrams, and even generate infrastructure code.

Let's have a conversation with our architecture

What if we could have a conversation with our diagrams? What if we could understand it quicker, get custom recommendations for our system, use real-time data and best practices from our company, and even generate new and updated diagrams?

We can.

I’m going to show you how using a demo app I built with Python using the Amazon Bedrock Converse API. This will let us carry out a conversation, back and forth, between a large language model and the user. We’ll incorporate tool use to get access to real-time data and a knowledge base to pull in data from our company’s custom best practices.

Try it yourself

If you’d like to grab the code and run through this yourself with your own diagram or see how it was implemented, you can get it here. Clone the repo, make sure you're set up with an AWS account, have access to the Claude Sonnet 2.5 model on Amazon Bedrock, and have Python 3.10.16 or later installed.

A simple serverless example

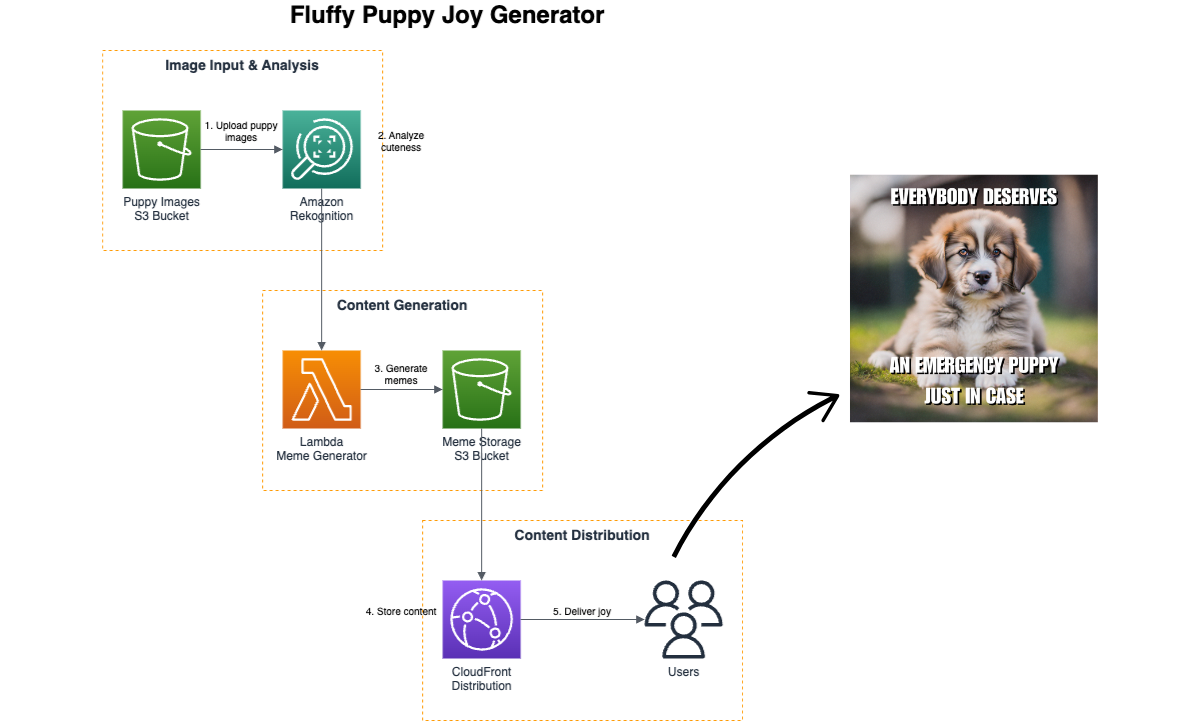

We’ll start with this diagram that visualizes a simple serverless application that generates joy from fluffy puppy memes. Very important business!

This app runs at the command line. Once we’ve started it up, we enter the diagram we want to analyze and our first query:

List the AWS Services used in the architecture diagram by official AWS name and excluding any sub-titles.

And the output:

Certainly! I'll analyze the architecture diagram in the image "demo/fluffy-puppy-joy-generator.png" and list the AWS services used by their official AWS names, excluding any subtitles. Here's the list:

1. Amazon S3 (Simple Storage Service)

2. Amazon Rekognition

3. AWS Lambda

4. Amazon CloudFront

These are the four distinct AWS services that are clearly identifiable in the architecture diagram for the Fluffy Puppy Joy Generator application.

We could then ask follow up questions like:

- What are the recommended strategies for unit testing this architecture?

- How well does this architecture adhere to the AWS Well Architected Framework?

- What improvements should be made to the resiliency of this architecture?

- Convert the data flow from this architecture into a Mermaid formatted sequence diagram.

- What are the quotas or limits in this architecture?

- Can you generate the CloudFormation code to provision this architecture?

This approach uses the Amazon Bedrock Converse API to have a conversation with an LLM. This API maintains the chat history as context for the future conversation. You can read more about how this works in my past blog post here. This conversation is limited to the training data for the model we have selected (in this case, Claude Sonnet 3.5).

Retrieving real-time data with a tool

But what if we wanted to ask more specific questions about this system? For instance, what if we wanted to know how much joy is being generated by this system? We could ask:

What is the joy count of the current system?

In order to support questions like this that get at real-time data, we'll need to use a tool with Amazon Bedrock. In this app, we have a tool called joy_count_tool.py that can retrieve the current, up-to-date joy count from the system via an API (our implementation retrieves it from a JSON file for simplicity). During the conversation, the model detects that a tool is needed, replies back to our app with which specific tool to use, and then our app uses the tool to retrieve the joy count. This data is then passed back to the model and a natural language response incorporating the joy count is returned to our app. You can read more about how tool use works with Amazon Bedrock in my past blog post here.

Incorporating custom data with an Amazon Bedrock Knowledge Base

Knowing the joy count is helpful, but how do we even know if this number is good or bad? How do we know if it adheres to our company's best practices? We could ask:

Is a joy count of 189 good or bad?

With an output:

Thank you for providing that information. Now I can assess whether the current joy count is good or bad based on the company's best practices.

According to the best practices for the Fluffy Puppy Joy Generator system, the joy count is evaluated on the following scale:

- Joy Count 1-100: Bad

- Joy Count 101-200: OK

- Joy Count 201-300: Good

- Joy Count 301-400: Better

- Joy Count 401+: Best

The current joy count of 189 falls within the "OK" range (101-200). This means that the application is generating some joy, but there is still room for improvement.

To elaborate:

1. The system is performing adequately, as it's not in the "Bad" category.

2. However, it hasn't yet reached the "Good" level, which starts at 201.

3. There's significant potential for enhancement to move into the higher categories.

The best practices suggest that when the joy count is in this range, more work is required to improve the experience. This could involve:

1. Refining the meme generation algorithms

2. Improving content selection

3. Optimizing distribution strategies

To boost the joy count and move into the "Good" or better categories, the team should focus on these areas of improvement. Regular reviews of the joy count and implementing updates to the system components are recommended to maintain and increase the level of joy generation.

In summary, while the current joy count of 189 is not bad, it indicates that the Fluffy Puppy Joy Generator has room for improvement to reach its full potential in spreading puppy-related joy to users.

To support a question like this, we use an Amazon Bedrock Knowledge Base to provide contextual information to the model. In this case, we have a knowledge base using an Amazon S3 bucket as a data source. This bucket contains data about our company's best practices. We use the Amazon Titan Text Embeddings v2 model with an Amazon OpenSearch Serverless vector store. You can read more about setting up an Amazon Bedrock Knowledge Base in my coworker's blog post here.

This may be a trivial example, but this means we can bring real-time data into our app and gather insights based on our own internal data, like our company's best practices or policies and then generate a natural language response incorporating that data. Then we could ask questions like:

- Are all our AWS resources properly configured according to our company's compliance requirements?

- Does this architecture adhere to our company best practices?

- Does this class diagram adhere to our naming best practices?

- Where are the current bottlenecks in this system?

Again, the Fluffy Puppy Joy Generator is only a trivial example but using generative AI as another tool in our toolbox with diagrams opens up more possibilities for visualizing our systems, making decisions, implementation, and getting customers using our software earlier.

Where we go from here

Now that we’ve covered how generative AI can help with these challenges, I want to leave you with some hands-on assets to work with your own architecture diagrams.

- Watch episode 1 of the Conversation with your Architecture series. Episodes 2 and 3 will be out in January 2025.

- Get the code, tweak it to your use case or analyze your own diagram here.

- Work through the workshop, Harnessing generative AI to create and understand architecture diagrams that I co-led at re:Invent 2024.

- Read how Olivier Lemaitre, AWS User Group leader, goes from diagram to code with Amazon Q Developer.

I hope this has been helpful. If you'd like more like this, smash that like button 👍, share this with your friends 👯, or drop a comment below 💬 about how you're using generative AI with diagrams. You can also find my other content like this on Instagram, LinkedIn, and YouTube.